Advertising agencies have long leveraged computer graphics to promote their brands, often with great success. With software driving the change, animation and VFX are beginning to take center stage in ad films. Case in point: Gurugram-based ad agency Thumba Thumba Private Limited, which recently entrusted Wayu Digital Studios with weaving an ad film around the Indian shoe brand FURO.

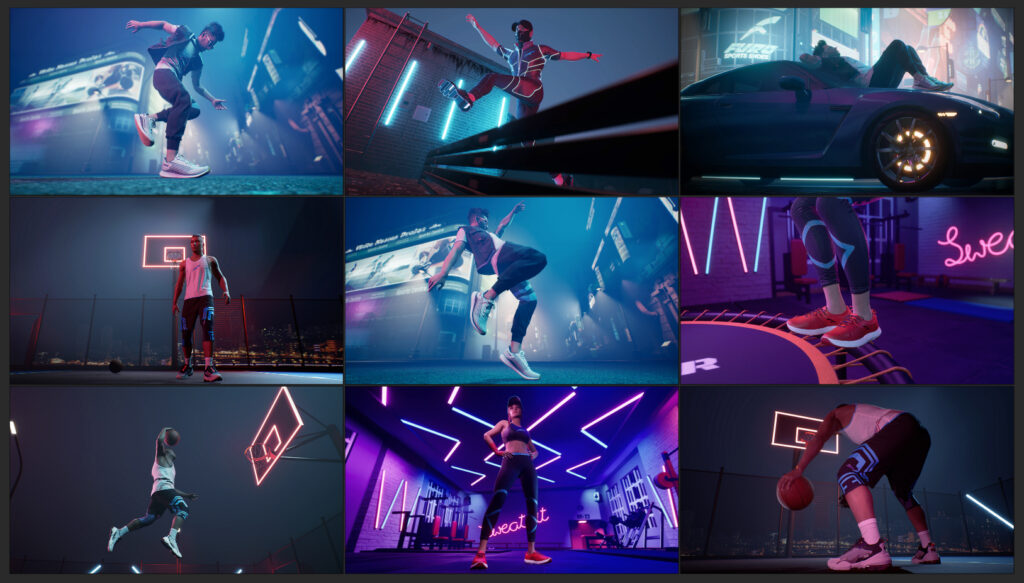

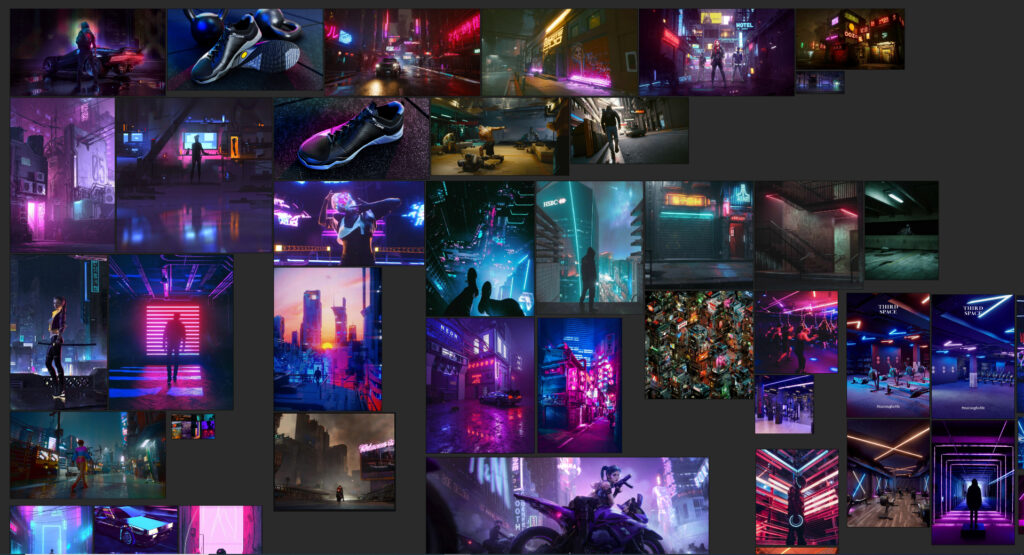

Deploying new-age software like Unreal Engine, the digital studio, which excels in creating 3D assets, designs and animation, crafted an ad film featuring a digital actor in a computer-generated world. We spoke to Wayu Digital Studios founder and CEO Dinesh Sehgal about how they created the imagery. He explains that their team was asked to create a fully animated music video for a shoe brand. “As per the brief, the video needed to be set around a futuristic theme with a little bit of cyberpunk style in it. The brand wanted to target new-age audiences, and to meet this brief, the team at Wayu Digital Studios decided to go for a very game-cinematic approach, creating surreal environments and focusing more on cinematography.”

In the early days, long render times made producing CGI imagery a daunting and frustrating task. With game engines promising speed, artists are now able to focus more on creativity and worry less about technical snags. Sehgal says a key reason for using Unreal Engine for this video was its ability to render in real time. “It helped us focus more on the art rather than getting stuck in technicalities.”

The team was asked to create semi-realistic digital characters, a daunting task that they accomplished using the MetaHuman tool in Unreal Engine. "Meta" has been ringing many bells of late, with Facebook chief Mark Zuckerberg bent on turning his vision of the metaverse into reality.

According to Epic Games’ Arvin Neelakantan, this tool, among others, has been built with the metaverse in mind. Using MetaHuman Creator, Sehgal says, his team was able to manipulate the characters quickly and create their own versions of digital actors. “Its blueprint inside Unreal Engine was quite helpful in implementing custom-designed costumes for our digital actors,” he adds.

According to Sehgal, new technologies like Unreal Engine have empowered his team to create realistic, never-before-seen visuals in real time. He explains, “For example, creating a digital actor was once considered a ball game for big-budget VFX films only, but today it is possible for a brand to have its own digital brand ambassador, and once you have one, there are endless ways you can use them to engage your audience.”

With the rise of AR, VR and XR, Sehgal believes digital content is no longer limited to 2D screens. “Brands can actually get their audience inside their content and let them experience their product from the comfort of their living room. So, I think it is the right time for brands to step up and consider using digital platforms to create and promote their content,” he shares.

The metaverse is set to allow users to gather and play games in a virtual world. Sehgal shares that digital assets are crucial to the entire process of creating that world. “Digital assets are your indestructible property. You can reuse them and modify them to create something new and immersive in the metaverse.” Explaining how assets are reusable, he shares, “In this video, we created the entire set digitally. The whole environment is built in a real-time engine, so in the future, if the brand wants, it can create an immersive experience for its audience using the same assets. So, unlike real sets, these digital sets stay forever.”

Breaking down how the team created the assets for the ad film, he said, “The video had to look realistic, so we carefully chose our camera lenses and movements for each shot. Most of the shots were rendered with wide lenses and low angles to frame the shoes properly. We also used neon elements to light our environments to give them a very stylised and futuristic look.”

Elaborating on the MetaHuman aspect, he said, “It was a very natural decision for us to use MetaHuman simply because we needed realistic-looking characters, and that too in real time! But at the same time, it was challenging, as we had no prior experience with the tool. We started with concept art by painting custom clothes directly on rendered images of MetaHuman and later created 3D clothes as per the design. The MetaHuman blueprint inside Unreal Engine helped us add custom clothes to the model, and it worked like a charm!”

Unlike in traditional pipelines, he explains, the stages of a CG pipeline can run simultaneously in Unreal Engine. For this video, the team started by collecting action references and blocking out their shots. They also used some motion-capture animation clips and mixed them to create meaningful actions. “The idea was to create multiple shots quickly to get to the first lineup of the whole music video. We already knew what kind of actions our characters would be doing, so our animation team was simultaneously working on creating a library of different animation clips,” he adds.

Comparing their approach with game production, where animations are created separately and later combined in the engine through blueprints, he said that in their case they used Unreal Engine's Sequencer. He further shared, “One of the benefits of using MetaHuman was that we could start our animation while our characters were still being designed! That’s true! We did all the animation on the base MetaHuman model and later assigned those animations to our final characters.”

Once the lineup was approved, the team proceeded to the shot-finalisation stage. He enthusiastically explained, “This is the stage where the real magic happens. We started compiling everything together in each shot. Since everything is real time, you don’t mind changing a little bit of the set layout or the lighting to set the mood, or even adjusting the animation timing to match-cut with the next shot, all in real time.”

He compares the process to shooting a film, but in a virtual world. “Here, once you are happy with the shot, instead of saying ‘Cut’ you just press the export button,” he concluded.