Back in the early 1990s, when the entertainment world was moving from analogue to digital, 3D Studio Max was in version 1, the original PlayStation was about to be released and I was a much younger man, my father (by more luck than judgement) stumbled across a seemingly new technology called Optical Motion Capture. Little did I know back then what an impact this stumble would have on my life, and I’m pleased to say that it happily continues to do so today.

Well, there you have it: in one paragraph, my introduction to Motion Capture… but this isn’t about me, it’s about the technology and how it all began. I think it’s also important to define Motion Capture as a pipeline that uses a participant, such as a human or an animal, to provide a template of movement that can then be translated into another medium.

Some people might say Motion Capture began way back in 1872, when Edward Muybridge (who later styled himself Eadweard) began experimenting with sequential photography, more specifically taking multiple pictures of animals whilst they were running. Through the evolution of his research, Muybridge began to capture images of what the human eye had previously been unable to see – for example, before this technique was used no one knew that when a horse runs there is a point in its stride at which all four legs are simultaneously off the ground! Then again, the more traditional animator may argue that Motion Capture was first put into practice in 1915 by Max Fleischer, who developed the technique of ‘Rotoscoping’. This technique was famously used in 1932 in the animated short ‘Betty Boop’s Bamboo Isle’, where artists used Fleischer’s Rotoscope to draw over film and make a cartoon character perform and move like a human. Walt Disney soon became a great practitioner of Rotoscoping and, starting with ‘Snow White’ in 1937, used the method as the backbone for many of his animations. But for me and what I know to be Motion Capture (conveniently passing by a myriad of camera tracking systems which evolved after Rotoscoping), I’m going to jump to the late 1960s and early 1970s, which is when human kinetics were first recorded.

In 1969 a group of engineers, soon to be known as Polhemus, built the first proprietary electromagnetic motion capture system, which was primarily used for military research, namely the tracking of a pilot’s head movement. This system used a magnetic transmitter to produce an electromagnetic dipole field, which can vary in size depending on the source used, and created a ‘signal to noise’ pipeline when AC sensors (trackers) were placed on an object. The Polhemus trackers were capable of delivering 6 degrees of freedom, that is, position along the X, Y and Z axes plus rotation about each of them, which (conveniently cutting through a lot of technical explanations!) allowed software to record accurate object movement within a calibrated space. Therefore, when these sensors were attached to a human, their movement could be recorded as 3D data and the first motion capture suit of its kind was born.
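To make ‘6 degrees of freedom’ a little more concrete, here is a minimal sketch of how a single tracker sample might be represented and used. It is purely illustrative: the `Pose6DOF` class, its field names and the yaw/pitch/roll rotation order are my own assumptions for the example, not the data format of Polhemus or any other system.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DOF:
    """Hypothetical tracker sample: 3 translations plus 3 rotations (radians)."""
    x: float
    y: float
    z: float
    yaw: float    # rotation about the Z axis
    pitch: float  # rotation about the Y axis
    roll: float   # rotation about the X axis

    def rotation_matrix(self):
        """3x3 rotation built as Rz(yaw) * Ry(pitch) * Rx(roll) (assumed order)."""
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def to_world(self, local_point):
        """Map a point given relative to the sensor into the calibrated space."""
        r = self.rotation_matrix()
        offset = (self.x, self.y, self.z)
        return tuple(
            sum(r[i][j] * local_point[j] for j in range(3)) + offset[i]
            for i in range(3)
        )


# A sensor sitting at (1, 2, 3) and turned a quarter turn about the Z axis.
sensor = Pose6DOF(1.0, 2.0, 3.0, math.radians(90), 0.0, 0.0)
fingertip = sensor.to_world((1.0, 0.0, 0.0))
```

The point of the sketch is simply that position alone (three numbers) says where a sensor is, while the three rotations say which way it is facing; together they let software place anything rigidly attached to the sensor.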

Electromagnetic systems are still very much in use today and, as they are not ‘line of sight’, their tracking devices can be conveniently placed within objects. This offers users many benefits when working in controlled environments, in buildings with walls and stairs, or outdoors in large open spaces. Millimetre accuracy is, however, an issue, as data can become polluted by metal-based structures and by a less than thorough calibration process of facing magnetic north.

Moving into the 1980s, pioneers of optical Motion Capture such as Motion Analysis and Oxford Metrics were formed and the technology began to forge a pipeline which is still very much relevant today. Optical Motion Capture is traditionally a line of sight solution whereby passive reflective markers (often referred to as ping-pong balls, but trust me they are not!) are placed onto the joints and some segments of the body, and a ring of carefully configured cameras is calibrated to create a ‘capture volume’ in which these passive markers can be tracked. To create accurate 3D data with 6 degrees of freedom, each marker must be tracked by a minimum of 3 cameras along planes relative to XYZ coordinates.
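The idea of several cameras agreeing on one marker can be sketched in a few lines. This is not how any particular commercial system does it; it is a simplified illustration that assumes each calibrated camera has already been reduced to a ray (a camera position plus a direction towards the marker), and the function names are hypothetical. It finds the point closest to all the rays in a least-squares sense. The geometry only strictly needs two non-parallel rays to locate a point; the example uses three to match the text, and the extra view helps average out noise.

```python
import math


def _normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def _solve3(a, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("rays are parallel or too few; cannot triangulate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def triangulate(rays):
    """Least-squares intersection of rays given as (origin, direction) pairs.

    For each ray, (I - d d^T) projects onto the plane perpendicular to the
    ray; summing these projections gives a 3x3 system for the nearest point.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        d = _normalize(direction)
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p
                b[i] += p * origin[j]
    return _solve3(a, b)


# Three made-up camera positions all looking at a marker at (1, 2, 3).
marker = (1.0, 2.0, 3.0)
cameras = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 5.0, 5.0)]
rays = [(c, [m - k for m, k in zip(marker, c)]) for c in cameras]
estimate = triangulate(rays)
```

With perfect rays the estimate lands on the marker itself; with real, slightly noisy camera data the rays never quite meet, which is one reason more cameras seeing each marker tends to give cleaner results.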

In 1989 the film ‘Don’t Touch Me’ was the first of its kind to successfully use and execute (in both production and post-production) a Motion Analysis Corp optical body capture pipeline. Throughout the 1990s many film and game production pipelines started to include an optical solution for their characters – I’m very proud to say Centroid’s (and my) first ever job was to capture Gary Oldman’s facial performance as ‘Spider Smith’ for the movie ‘Lost in Space’. This in itself was the first time optical facial data was ever used to animate a character’s face in a Hollywood movie!

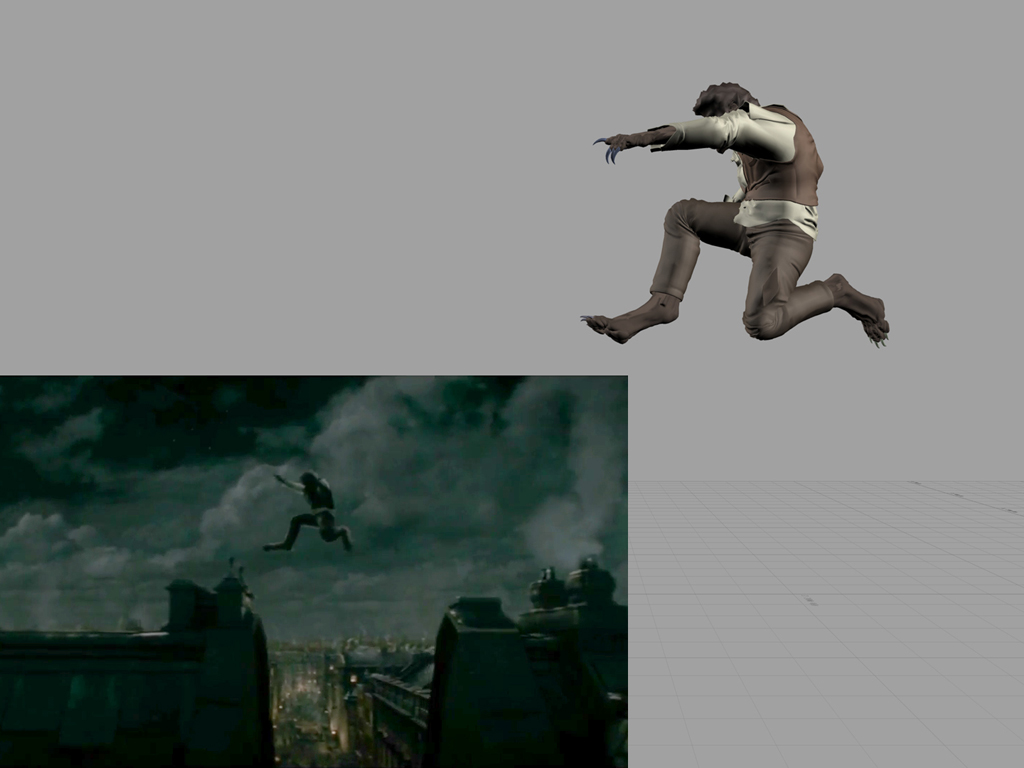

As optical pipelines continued to evolve and became a much more stable tool for creating realistic animation, Motion Capture began to move into the mainstream. Mass animation for the then ever-growing console games market could be produced in a much shorter period of time and with far greater realism, as directors began to look at performers as more than just bodies in suits: as people who could enrich and enhance the look and style of their game. Starting with Titanic, James Cameron began to use Motion Capture to simulate crowds, and some years later pushed the technology onto multiple principal performers in Avatar. On The Lord of the Rings, Peter Jackson, with Andy Serkis, really embodied the technology with the digital creation of Gollum, and a new standard was set in the Motion Capture volume. I think it would be fair to say the term ‘Performance Capture’ came into use in recognition of the importance of actors and what they could bring to character animation through the capture volume. Again, through Andy Serkis’ performance as Gollum came the realisation that capturing an actor’s facial movement simultaneously with the body gave a director obvious benefits (as well as giving the Producer budgetary benefits!). This introduced the head mounted camera, or ‘HMC’, into the pipeline, removing the limitations previously placed on facial capture by a line of sight solution.

This is really where things are today, although of course I’ve neglected to mention the fantastic Virtual Camera and how it offers everyone on both sides of the camera a real insight, a moving maquette, into how the animation will look once it’s been post-produced. Both optical and magnetic pipelines are used ‘on set’, enabling live action and CG characters to interact in the real world so that performances can be pushed to the best possible result. Not all optical solutions use passive markers; in fact, LEDs were preferred on many recent movie productions as they give the cameras a greater depth of field, which in turn allows technicians to build bigger performance areas for actors to work in.

There are many systems, pipelines, actors and practitioners which I’ve neglected to mention in this article and for this I apologise, but it has been my aim to give a general overview of the industry as I know it, rather than an in-depth technical history. It is in this vein, and as a conclusion to where we at Centroid currently are, that I would like to give a final nod to the system I know best and its latest camera incarnation: the Motion Analysis Raptor. I’d like to somewhat excitedly point out that these optical cameras can be configured outside, on terrains or wherever a location unit might desire (weather conditions permitting, of course), bringing virtual capabilities ever closer to those of ‘live action’. It is for this reason that, although I’m now a veteran of this industry, I know there is still so much more to come!

(These are purely the personal views of Centroid Motion Capture CEO Philip Stilgoe.)