In its keynote, NVIDIA has announced a number of new products that bring a new generation of GPUs and AI solutions to market alongside the existing DGX family line-up. The announcements come amid the ongoing COVID-19 lockdown, which led to the cancellation of GTC San Jose earlier this year. They mark the latest in a wave of innovation and expansion at NVIDIA, following the acquisition of Mellanox Technologies last year and of Cumulus, announced just this week.

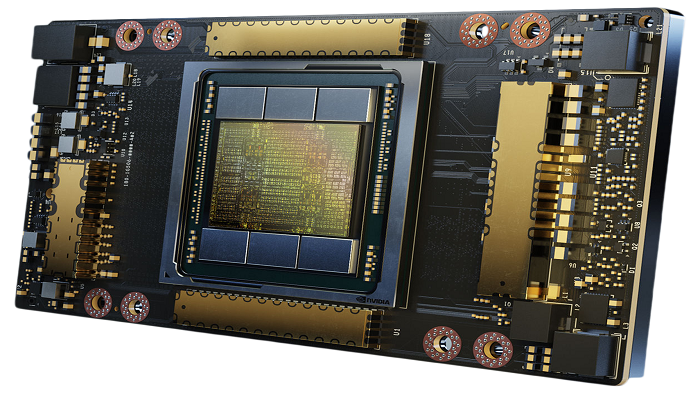

NVIDIA A100 GPU:

The NVIDIA A100 GPU delivers unparalleled acceleration at every scale for AI, data analytics and high-performance computing (HPC), built to tackle the world's toughest computing challenges. The A100 is the engine of the NVIDIA data center platform. With A100 you can efficiently scale up to thousands of GPUs or, using the new Multi-Instance GPU (MIG) technology for elastic GPU computing, partition a single A100 into as many as seven isolated GPU instances. NVIDIA quotes up to 7x higher throughput than V100, and because each GPU can run several instances simultaneously, you have the flexibility to use a GPU as a whole or split it into independent instances.

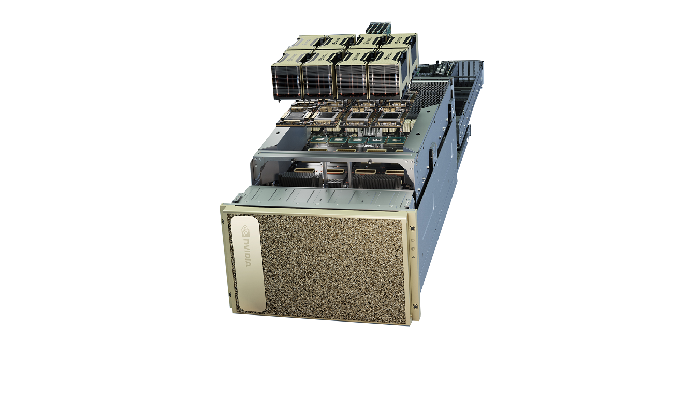

NVIDIA DGX A100:

NVIDIA DGX A100 is the universal system for all AI workloads. It integrates eight of the world's most advanced NVIDIA A100 Tensor Core GPUs, making it the first AI system to deliver five petaFLOPS. Enterprises can now build a complete workflow, from data preparation and analytics to training and inference, on one easy-to-deploy AI infrastructure. NVIDIA sees the DGX A100 fitting into the existing line-up of DGX systems and the wider NVIDIA product catalogue, covering the whole journey from idea to results across the office, the datacentre and the edge. Combined with innovative GPU-optimised software and simplified management tools, these fully integrated systems are designed to give data scientists the most powerful tools for AI development, from the office to the data center.
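The five-petaFLOPS figure is an aggregate of per-GPU peak throughput. As a rough sanity check, the arithmetic can be sketched in Python, assuming NVIDIA's published A100 peak of 624 TFLOPS for FP16 Tensor Core operations with structural sparsity (312 TFLOPS without it). The constant names and the helper function are illustrative only, and these are theoretical peaks that real workloads will not fully reach.

```python
# Assumed per-GPU figure: NVIDIA's published A100 peak for FP16 Tensor Core
# operations with structural sparsity (theoretical, not a measured result).
A100_FP16_SPARSE_TFLOPS = 624
GPUS_PER_DGX_A100 = 8  # eight A100 GPUs per DGX A100, as stated above


def system_peak_pflops(gpus: int, tflops_per_gpu: float) -> float:
    """Hypothetical helper: aggregate peak in petaFLOPS (1 PFLOPS = 1,000 TFLOPS)."""
    return gpus * tflops_per_gpu / 1000


peak = system_peak_pflops(GPUS_PER_DGX_A100, A100_FP16_SPARSE_TFLOPS)
print(f"DGX A100 peak: {peak:.2f} PFLOPS")  # prints "DGX A100 peak: 4.99 PFLOPS"
```

Eight GPUs at 624 TFLOPS each come to 4,992 TFLOPS, which NVIDIA rounds to five petaFLOPS.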

As the AI development journey progresses, each of these solutions lets the most important work move effortlessly from one system to the next without changing any code along the way, so that resources can be right-sized for the task at hand. NVIDIA DGX A100 unifies all AI workloads in a single consolidated system with optimised software, making it the foundational building block for AI infrastructure. DGX A100 further lowers total cost of ownership (TCO), not only by offering the highest performance but also through improved infrastructure utilisation, with the flexibility to handle multiple parallel workloads from multiple users.

Supermicro HGX A100 platforms:

Supermicro, one of Boston's partners, has also announced three servers featuring NVIDIA A100 GPUs. They offer a choice of three form factors for AI compute, model training, deep learning and high-performance computing workloads. The upcoming 4U HGX A100 system (SYS-420GP-TNAR) features 8x A100 SXM4 GPUs and offers Titanium-level efficiency, while the AS -4124GS-TNR also offers 8x GPUs, but in the PCIe 4.0 form factor. The most interesting of the three, however, is a compact yet high-density 2U server with 4x A100 SXM4 GPUs that takes advantage of PCIe 4.0 thanks to dual AMD EPYC 7002 Series CPUs. This really is a benchmark-busting solution, and it gives those who aren't yet ready to scale to the 8-GPU configurations offered by the DGX A100 and HGX A100 a powerful alternative.